In this scenario we’ll start a service locally and scan it on the localhost address. You can use this approach to scan your own application within the Bitbucket Pipelines build environment. It is also possible to start a Pipelines service container manually to review the startup sequence.

The first thing to do is navigate to your repository and choose Pipelines in Bitbucket. From there, click Create your first pipeline, then scroll down to the template section. If you need to simplify the development process for your software team and want a reliable solution, it’s a great option. Sometimes we need to install some extra dependencies; in that case, we will use the following command. With Bitbucket Pipelines, you can quickly adopt a continuous integration or continuous delivery workflow for your repositories. A fundamental part of this process is turning manual processes into scripts that machines can run automatically, without the need for human intervention.
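As a minimal sketch of turning manual steps into a script, a bitbucket-pipelines.yml file at the repository root might look like the following. The build image, the extra package, and the test command are illustrative assumptions and are not taken from this article; the install line simply stands in for whatever dependency your project actually needs.

```yaml
# bitbucket-pipelines.yml (illustrative sketch, not the article's own config)
image: python:3.12  # assumed build image; pick one that matches your stack

pipelines:
  default:  # runs on pushes to any branch without a more specific pipeline
    - step:
        name: Build and test
        script:
          # hypothetical extra dependency, installed before the build
          - apt-get update && apt-get install -y --no-install-recommends jq
          # assumes requirements.txt exists and lists pytest
          - pip install -r requirements.txt
          - python -m pytest
```

Each entry under script runs in order inside the build container, and the step fails as soon as one command exits with a non-zero status.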

We hope this article has helped you learn more about Bitbucket Pipelines. It covered the basic idea behind Bitbucket Pipelines along with illustrations and examples, and it showed how and when to use them.

Pricing plans are available for startups, small and medium businesses, and large enterprises. Enterprise capabilities with additional features and premium support are available for organizations with 2,000 or more employees. But what if you need more build minutes and have run out of your monthly limit?

What’s more, using the service provides fast feedback loops because the development workflow is managed, in its entirety, within Bitbucket’s Cloud. Everything is handled in one place, from code right through to deployment. The build status is displayed on all commits, pull requests, and branches, and you can see exactly where a command may have broken your latest build.

Monitor Your Pipelines

Bitbucket Pipelines supports caching build dependencies and directories, enabling faster builds and reducing the number of consumed build minutes. Services are defined in the definitions section of the bitbucket-pipelines.yml file. We do not charge for cloud application accounts that are not tied to employees.
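Dependency caching can be sketched as follows, assuming a Node.js project. The node cache is one of the predefined caches; the build-output cache name and its path are hypothetical and only show how a custom directory could be declared.

```yaml
image: node:20  # assumed build image

definitions:
  caches:
    # hypothetical custom cache: a name mapped to a directory to persist
    build-output: dist/.cache

pipelines:
  default:
    - step:
        name: Install and build
        caches:
          - node          # predefined cache covering node_modules
          - build-output  # custom cache declared under definitions
        script:
          - npm install
          - npm run build  # assumes a "build" script in package.json
```

On the first run the caches are empty and get populated at the end of the step; later runs restore them before the script starts.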

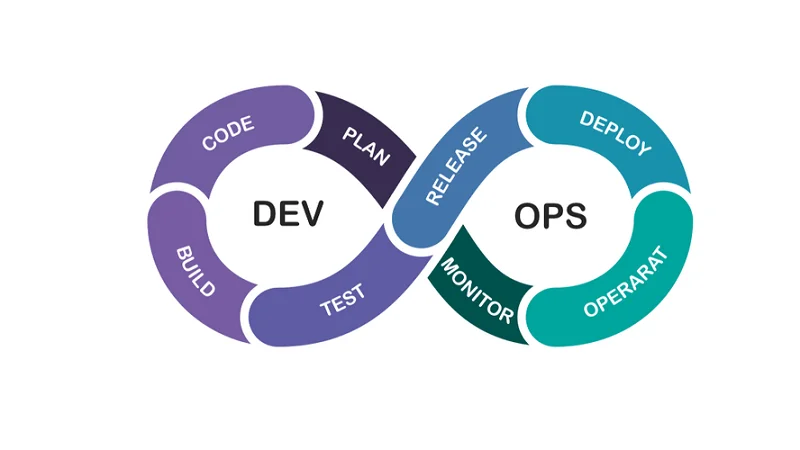

Note that we don’t need to define Docker as a service in the definitions section of our Bitbucket pipeline because it is one of the default services. Under the hood, this mounts the docker CLI into the container running our pipeline, allowing us to run any docker command we want inside our pipeline. When testing with a database, we recommend that you use service containers to run database services in a linked container. Docker has a number of official images of popular databases on Docker Hub. If a service has been defined in the ‘definitions’ section of the bitbucket-pipelines.yml file, you can reference that service in any of your pipeline steps. More simply, it’s a set of best practices and methodology that helps teams realize their business goals while maintaining a good level of security and high code quality.
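A database service container might be declared and referenced like this. It is a sketch that assumes the official postgres image and a Node.js test suite; the database name and credentials are throwaway values chosen for illustration.

```yaml
image: node:20  # assumed build image

definitions:
  services:
    postgres:
      image: postgres:16  # official image from Docker Hub
      variables:
        # throwaway credentials for a disposable test database only
        POSTGRES_DB: pipelines
        POSTGRES_USER: test_user
        POSTGRES_PASSWORD: test_user_password

pipelines:
  default:
    - step:
        name: Integration tests
        services:
          - postgres  # must match the name under definitions.services
        script:
          # the database is reachable from the step on localhost, port 5432
          - npm install
          - npm test
```

Any other step can reuse the same service by listing postgres under its own services key.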

Help Us Continuously Improve

Allowed child properties: requires one or more of the step, stage, or parallel properties. In the following tutorial you’ll learn how to define a service and how to use it in a pipeline. However, if you need full control over integrating your Bitbucket Pipeline with Octopus, the pre-configured CLI Docker image is the recommended method to do that. The octopus-cli-run Bitbucket Pipe is currently experimental. As Bitbucket Pipelines is only available as a cloud offering, your Octopus Server must be accessible over the Internet.
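To illustrate the three child properties, the sketch below places a step, a parallel group, and a stage in one pipeline. The step names and echo commands are placeholders, not real build logic.

```yaml
pipelines:
  default:
    - step:  # a single step
        name: Build
        script:
          - echo "build"
    - parallel:  # steps in this group run at the same time
        - step:
            name: Unit tests
            script:
              - echo "unit tests"
        - step:
            name: Lint
            script:
              - echo "lint"
    - stage:  # groups steps under one name
        name: Deploy
        steps:
          - step:
              name: Deploy to staging
              script:
                - echo "deploy"
```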

Depot provides a drop-in replacement for docker build that lets you work around these limitations. The docker cache allows us to leverage the Docker layer cache across builds. In this post, we’ll look at how to build Docker images in Bitbucket Pipelines. Then we’ll briefly introduce how Depot eliminates those limitations. Everything about it works great except that I need a way to pass a command line argument to the victoria-metrics container at the end of the file. Over time, developers may end up with the same steps copied and pasted between pipelines and repositories, which can become burdensome to manage and add to the runtime of pipelines.

Databases And Service Containers

Some limitations ultimately inhibit our ability to build Docker images as quickly as possible or to build for the different architectures we want to support. However, there are workarounds, and not everyone needs to build multi-platform images, so it works for some use cases. By swapping docker build for depot build in our Bitbucket Pipeline, we get a complete native BuildKit environment for both Intel and Arm CPUs and built-in persistent caching on fast NVMe SSDs. These limitations don’t stop us from building a Docker image, but they do prevent us from building a Docker image quickly.

- Secrets and login credentials should be stored as user-defined pipeline variables to avoid being leaked.

- If you want to configure the underlying database engine further, refer to the official Docker Hub image for details.

- You define these additional services (and other resources) in the definitions section of the bitbucket-pipelines.yml file.

- Store and manage your build configurations in a single bitbucket-pipelines.yml file.
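As a sketch of keeping credentials out of the configuration file, the step below reads registry credentials from pipeline variables instead of hard-coding them. DOCKER_USERNAME and DOCKER_PASSWORD are hypothetical variable names assumed to be defined (and marked as secured) in the repository settings, and my-app is a placeholder image name; BITBUCKET_COMMIT is a variable that Pipelines provides.

```yaml
pipelines:
  default:
    - step:
        name: Build and push image
        services:
          - docker
        script:
          # hypothetical user-defined variables; their values never appear in this file
          - echo "$DOCKER_PASSWORD" | docker login --username "$DOCKER_USERNAME" --password-stdin
          - docker build -t "$DOCKER_USERNAME/my-app:$BITBUCKET_COMMIT" .
          - docker push "$DOCKER_USERNAME/my-app:$BITBUCKET_COMMIT"
```

Piping the password through --password-stdin keeps it out of the command line that is echoed to the build log.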

Add the docker-compose-base.yml Docker Compose configuration file to your repo. Commit your code and push it to Bitbucket to initiate a pipeline run. You can watch your scan progress in Bitbucket, and check the StackHawk Scans console to see your results.

In this case both the build step and the Redis service will require 1 GB of memory. We can enable a Docker cache in Bitbucket Pipelines by specifying the cache option in our config file.
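Enabling the Docker cache could look like the sketch below. It assumes a Dockerfile at the repository root, and my-app is a placeholder image name.

```yaml
pipelines:
  default:
    - step:
        name: Build image
        services:
          - docker  # default service, no entry needed under definitions
        caches:
          - docker  # predefined cache that reuses image layers across builds
        script:
          - docker build -t my-app .
```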

Bitbucket Pipelines can create separate Docker containers for services, which results in faster builds and easy service editing. For details on creating services see Databases and service containers. The services option is used to define the service, allowing it to be used in a pipeline step. I am trying to set up a Bitbucket pipeline that uses a database service provided by a Docker container. However, in order to get the database service started correctly, I need to pass an argument to be received by the database container’s ENTRYPOINT.

Attaching A Service To The Setup

The good news is that you can increase or top up your minutes through what are known as “build packs.” You can purchase build packs that add an extra 1,000 build minutes in $10 increments. Once a credit card is saved, Bitbucket will also top up your minutes automatically if you run over. On top of that, by adding a few lines to your Pipelines build configuration, you can also scan dependencies for vulnerabilities automatically. A pull-requests pipeline is a special pipeline that runs only on pull requests initiated from within your repository.

Octopus Deploy will be used to take those packages and push them to development, test, and production environments. Nira’s Cloud Document Security system provides full visibility of internal and external access to company documents. Organizations get a single source of truth combining metadata from multiple APIs to provide one place to manage access for every document that employees touch. Nira currently works with Google Workspace, Microsoft 365, and Slack.

Since HawkScan will be probing many URLs on nginx-test, logging would generate excessive output in your pipeline results. If you don’t have one already, create a new Bitbucket account. Then create a new repository to contain the configurations for the examples below. Pipelines pricing is based on how long your builds take to run.

A service is another container that is started before the step script, using host networking both for the service and for the pipeline step container. This example bitbucket-pipelines.yml file shows both the definition of a service and its use in a pipeline step. With Bitbucket Pipelines, you can get started right away without the need for a lengthy setup; there’s no need to switch between multiple tools.
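The original example file is not reproduced here, so the following is a stand-in sketch rather than the article’s own configuration. It defines a Redis service, attaches it to a step, and pings it over localhost. The redis:7 build image is an assumption made only because it ships redis-cli.

```yaml
image: redis:7  # assumed build image, chosen only because it includes redis-cli

definitions:
  services:
    redis:
      image: redis:7
      memory: 512  # in MB; a service gets 1024 MB when this is omitted

pipelines:
  default:
    - step:
        name: Ping Redis
        services:
          - redis
        script:
          # the service shares the step's network, so it answers on localhost
          - redis-cli -h localhost ping
```

Because the service and the step share a network, no hostname other than localhost is needed to reach Redis on its default port.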